LLM Prompting for Software Development

Over the past couple of years, I've turned to my trusty sidekick, ChattyG, to craft a variety of projects:

1. ParentWrap, a parent email summarizer (Express, PostgreSQL, TailwindCSS).

2. Repth, an AI cycling coach, currently coaching over 500 elite-level cyclists (Express, PostgreSQL, WebSockets, Redis, TailwindCSS, Strava API).

3. Vamaste, an AI moving checklist app (NextJS, Shadcn, Prisma, Clerk, PostgreSQL).

4. Brocly, an iOS app for tracking veggie intake (Swift).

5. CarPrep, an iOS app for doing a delivery inspection of a new car (Swift).

6. A whole bunch of Python scripts for, well, just about everything.

I have a secret: I have no idea what I'm doing. I'm quasi-full-stack, but my expertise is in front-end development, so diving into Python and Swift was like trying to write a love letter in a language I barely spoke. Swift, in particular, was my Everest: years of attempts, yet even simple apps seemed out of reach.

But I know app architecture well enough to ask the right questions. With a bit of grit and a lot of prompting, I managed to piece together apps that not only worked but were also secure and scalable. None of this would have been possible before LLMs hit the scene.

You might wonder, "Is the code any good?" That's a tough one. If an app does what it's supposed to, solving the problem it was designed for, should I lose sleep over whether the code is poetry? In my younger, more impressionable years, I used to think so, but experience has taught me otherwise.

Through all this, one of the biggest lessons I've learned is how to effectively pair-program with an LLM. This experience has convinced me that LLMs aren't just handy tools; they're game changers in software development.

But every few days, I'll come across a comment like this:

This is a common refrain from engineers. There are several factors at play:

1. What are they trying to accomplish in their development work?

2. How are they prompting the LLM?

3. What is their expectation of "real software development"?

4. What are their code quality standards/expectations?

This reply gives a clue. They're asking it to do too much at once, and (rightfully) getting frustrated at the LLM "losing the plot" partway through its response.

This is a skill issue. The good news is it's an easy skill to learn!

By sharing my experience, I hope to shed some light on the not-so-talked-about part of coding: working with what you've got and learning on the fly. Let's see how you can leverage an LLM to turn your own ideas into reality, one line of code at a time.

Let's dive into the art of crafting effective prompts with a hands-on, real-world example of app development. We'll start with a common mistake and refine our approach step by step, moving from less effective to highly efficient prompting.

Our journey begins with a fundamental shift in how we think about large language models (LLMs). Instead of imagining an LLM as a seasoned developer capable of multitasking with ease, think of it as a hyper-specialist. This specialist excels in one tiny area at a time: an area you define and refine as your project progresses.

For today's lesson, we're building a to-do list. Not just any to-do list. It's a Progressive Web App (PWA) that has database storage, holds state, and supports full CRUD capabilities.

As we progress, I'll show the actual, unedited outputs from ChatGPT to highlight how each variation in our prompts makes a difference. This hands-on comparison will not only show you what changes but also why these changes matter. Let's get started and see how simple shifts in prompting can lead to significant improvements in development outcomes.

Prompt 1.0

Adopt the persona of an expert Javascript/PWA engineer. Build me a progressive web app that is a todo list. It should support CRUD functionality for tasks, and allow the user to mark them as complete, or incomplete, with a checkbox. The app should hold state, so the user can reload the app at any time, and it will remember the list. Items should disappear 5 seconds after they are checked. It should allow for user creation, stored in an sqlite database with proper password encryption.

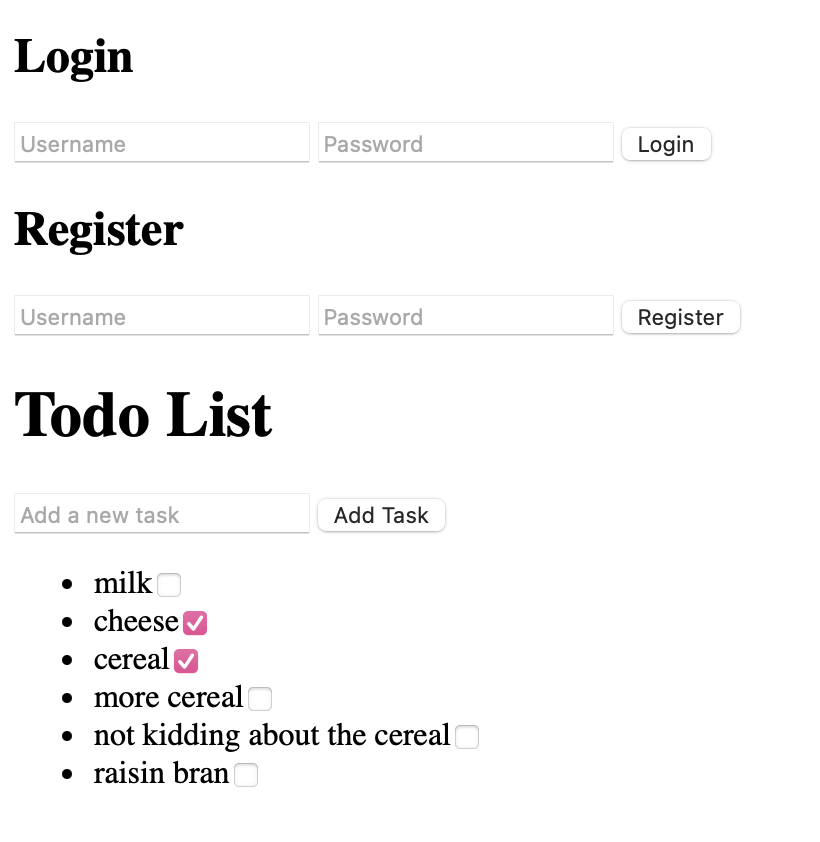

Prompt 1.0 Output

ChatGPT is diligently following your instructions, but there's a hiccup. It's trying to pack everything you asked for into a single response. As a result, the code it produces is only halfway there and acts a bit quirky.

It is also missing some of the functionality we asked for:

Can't update a task

Can't delete a task

Can't log in (no server-side code)

Can't register (no server-side code)

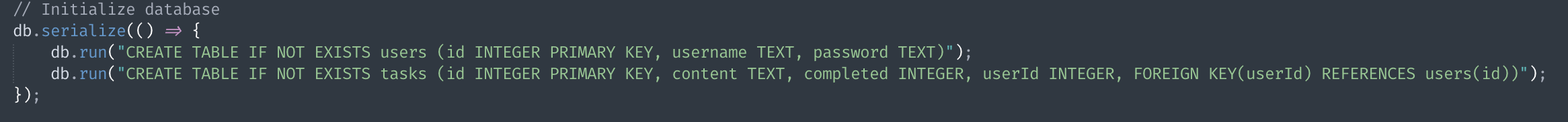
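The missing login and registration pieces are server-side work, and the usual approach is to store a salted, deliberately slow password hash rather than an encrypted password. Below is a minimal Python sketch of that logic, assuming a SQLite `users` table and PBKDF2 from the standard library. The table layout, the function names `register` and `login`, and the iteration count are illustrative assumptions, not what ChatGPT generated for this (Node-based) app.

```python
import hashlib
import hmac
import os
import sqlite3

# Illustrative work factor; check current guidance before choosing a real value.
ITERATIONS = 200_000


def init_users(conn: sqlite3.Connection) -> None:
    """Create the hypothetical users table if it does not exist yet."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users ("
        " username TEXT PRIMARY KEY,"
        " salt BLOB NOT NULL,"
        " pw_hash BLOB NOT NULL)"
    )
    conn.commit()


def _hash(password: str, salt: bytes) -> bytes:
    # PBKDF2-HMAC-SHA256: a deliberately slow, salted, one-way hash.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def register(conn: sqlite3.Connection, username: str, password: str) -> bool:
    """Store a new user with a random per-user salt; False if the name is taken."""
    salt = os.urandom(16)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO users (username, salt, pw_hash) VALUES (?, ?, ?)",
                (username, salt, _hash(password, salt)),
            )
    except sqlite3.IntegrityError:  # username already exists
        return False
    return True


def login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    """Re-hash the attempt with the stored salt and compare in constant time."""
    row = conn.execute(
        "SELECT salt, pw_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, stored = row
    return hmac.compare_digest(_hash(password, salt), stored)
```

For example, after `register(conn, "ada", "correct horse")`, calling `login(conn, "ada", "correct horse")` returns True and any other password returns False. Only the salt and the hash are stored, so the database never holds the password itself.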

And look at how it sets up the database:

This should be a one-off task, but here it's happening every time we launch the app. In the end, we face a harsh truth: this code is unusable. This is a classic case of trying to do too much at once, and it shows why refining our prompts can make a big difference.
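A common way to make database setup a one-off is to move it into the data layer and make it idempotent, so it can run once at server startup without touching existing data. Here is a minimal Python/SQLite sketch of the idea; the `todos` columns and the `open_db` name are assumptions for illustration, not the schema from the generated app.

```python
import sqlite3

# Hypothetical schema for the to-do table.
SCHEMA = """
CREATE TABLE IF NOT EXISTS todos (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    title     TEXT    NOT NULL,
    completed INTEGER NOT NULL DEFAULT 0
);
"""


def open_db(path: str) -> sqlite3.Connection:
    """Open the database once at server startup and ensure the schema exists.

    CREATE TABLE IF NOT EXISTS is a no-op when the table is already there,
    so existing rows survive restarts instead of being recreated or wiped.
    """
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn
```

The design choice is where the call lives: `open_db` runs once when the server boots and the connection is shared with request handlers, rather than setup code running on every page load. Running the schema again later is harmless rather than a reset.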

Let's unpack what's going wrong and learn how to steer clear of these common pitfalls.

Why do these snags appear right out of the gate? It often boils down to a tiny word with big implications: "and."

TIP #1:

Watch out for "and" in your prompts: it's a signal you might be piling on too much. Instead of bundling tasks, focus narrowly. Ask for what you need next, and only that.
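As a rough self-check for this tip, you could scan a draft prompt for "and" before sending it. This is a hypothetical Python helper and a crude word-count heuristic: it can't tell an "and" that joins two tasks from one inside a single requirement, so treat a hit as a reason to reread the prompt, not as a rule.

```python
import re


def count_ands(prompt: str) -> int:
    """Count standalone occurrences of the word "and" (case-insensitive)."""
    # \b word boundaries skip words like "Android" or "sandbox".
    return len(re.findall(r"\band\b", prompt, flags=re.IGNORECASE))


def review_prompt(prompt: str, max_ands: int = 0) -> str:
    """Return a short verdict on whether a prompt may be bundling tasks."""
    n = count_ands(prompt)
    if n > max_ands:
        return f"{n} 'and'(s) found: consider splitting this prompt"
    return "looks atomic"
```

Run against the focused request "Using the above structure, generate the code for just database.js", it reports "looks atomic"; a mega-prompt listing CRUD, state, auto-hiding, and user creation gets flagged several times over.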

TIP #2:

Be wary of being overly specific when it's not necessary. For instance, asking the LLM to think like a JavaScript/PWA engineer when dealing with a task that involves substantial HTML and CSS could actually detract from the output quality. Sometimes, less is more when setting the context.

At this stage, it should be clear: effective prompting isn't about squeezing every detail into one mega-prompt. It's about breaking down a complex prompt into manageable, digestible pieces that the LLM can handle with precision. In other words, don't describe the completed LEGO castle in your prompt; describe the piece that is part of the foundation of the LEGO castle.

Prompt 2.0

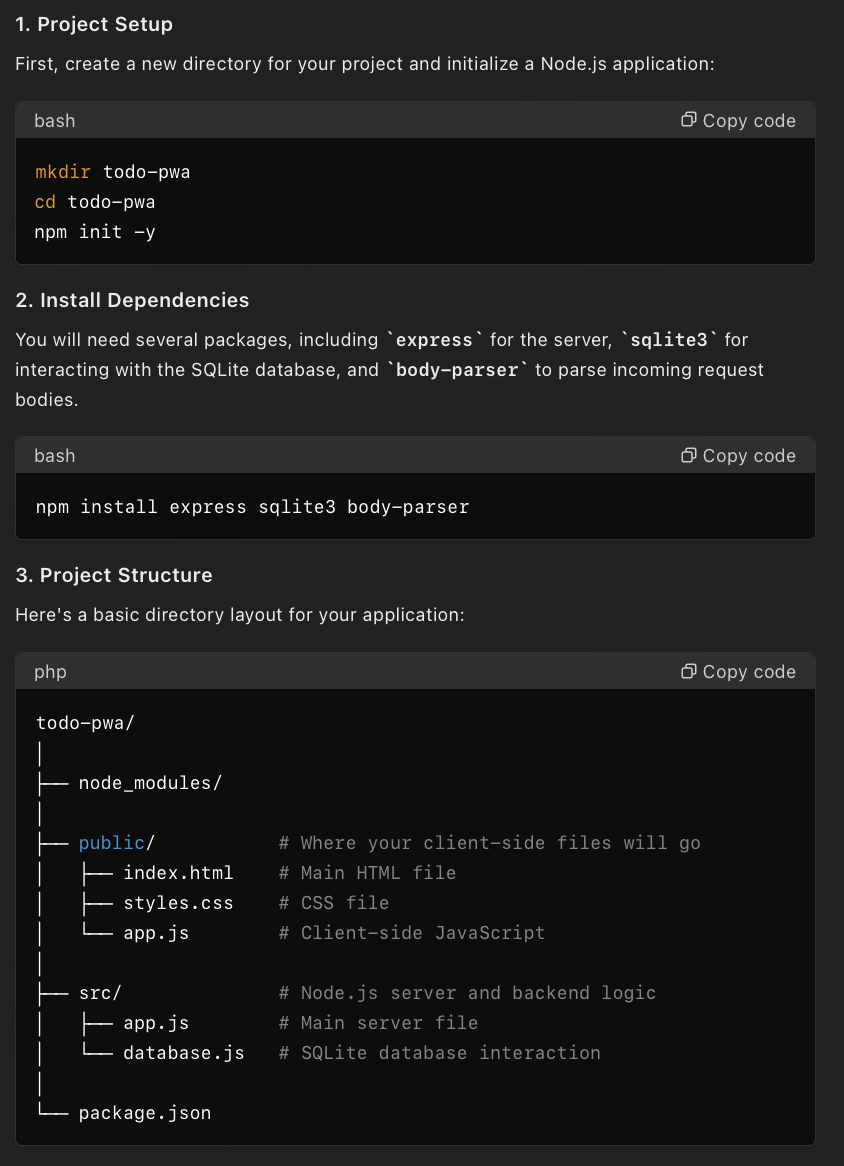

Adopt the persona of a full-stack web engineer. I am building a todo list progressive web app that uses node as a server, with sqlite to store the data. Give me the basic scaffolding to initially create this app.

Prompt 2.0 Output

This initial step is actually pretty impressive. By taking the reins on the file structure itself, ChatGPT not only nails the setup but also sets a solid context for the rest of our development. It smartly delineates between logic and data layers, avoiding the trap of initializing the database on every page load. This methodical approach lays a strong foundation right from the get-go.

Because ChatGPT is invariably locked in "helpful assistant" mode, it can overreach. It gives us the code for these files, but the code is truncated and dotted with numerous "// Add more database functions here as needed" comments because of the output's token limits.

Here's how we can streamline this further:

TIP #3:

Be as clear about what you don't need as you are about what you do need. This precision will not only clean up the output but also ensure that ChatGPT focuses only on the essentials, enhancing the overall utility of its responses. Let's apply this insight and refine our approach to get even more tailored results.

Prompt 2.1

Adopt the persona of a full-stack web engineer. I am building a todo list progressive web app that uses node as a server, with sqlite to store the data. Give me the basic scaffolding to initially create this app. Do not generate code for any of the files, just the initial setup. I will ask you for code later.

Now ChatGPT has some breathing room. It can dedicate an entire response just to the setup, explaining what each file is for and how it works.

Now that our app's scaffolding is in place, let's start coding.

Prompt 3.0

Using the above structure, generate the code for app.js

Prompt 3.0 Output

[dozens of errors]

A non-working app.

In a burst of enthusiasm, I asked ChatGPT to tackle app.js first, the heart of our application. Why the rush? It seemed like the most substantial part, and I thought, "Let's get this big chunk out of the way!" But here's where the strategy fails: app.js relies heavily on the existence and proper functioning of all the other files in the project. This circles us back to TIP #1: Think atomically.

Which files are truly foundational? The files that are relatively self-contained and provide the backbone for others are the ones to develop first. By assembling this foundation, we create a solid base on which the more complex parts can build.

Through that lens, developing database.js first is a logical choice. It serves as the data layer that app.js will later reference. While it's not the simplest file, it is complete within itself, and the entire app's functionality pivots around the data layer.
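The ordering rule above, building files that depend on nothing before the files that reference them, is a topological sort of the project's dependency graph. Here is a minimal Python sketch using the standard library's `graphlib` (Python 3.9+). The dependency map is an assumption about this to-do app's structure made for illustration, not something ChatGPT produced; the prompt wording mirrors the single-file requests used in this walkthrough.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each file lists the files it relies on.
DEPENDENCIES = {
    "database.js": set(),                    # data layer: self-contained
    "app.js": {"database.js"},               # references the data layer
    "styles.css": set(),
    "index.html": {"app.js", "styles.css"},  # loads the script and styles
}


def build_order(deps: dict[str, set[str]]) -> list[str]:
    """Return the files so that every file comes after its dependencies.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())


def atomic_prompts(deps: dict[str, set[str]]) -> list[str]:
    """One single-file request per prompt, in dependency order."""
    return [
        f"Using the above structure, generate the code for just {name}"
        for name in build_order(deps)
    ]
```

Files with no dependencies between them (here, `database.js` and `styles.css`) can come in either order; the sort only guarantees that nothing is generated before the files it relies on.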

TIP #5:

Unsure about the order of file creation? Just ask ChatGPT! Frame your query like, "We're building this app one file at a time. Which file should we generate next?" This methodical, step-by-step approach prevents oversight and ensures each component is robustly constructed before moving on.

Prompt 3.1

"using the above structure, generate the code for just database.js"

"which file should we generate after database.js?" [response: app.js]

"generate the code for just app.js"

"now just index.html"

"now just styles.css"

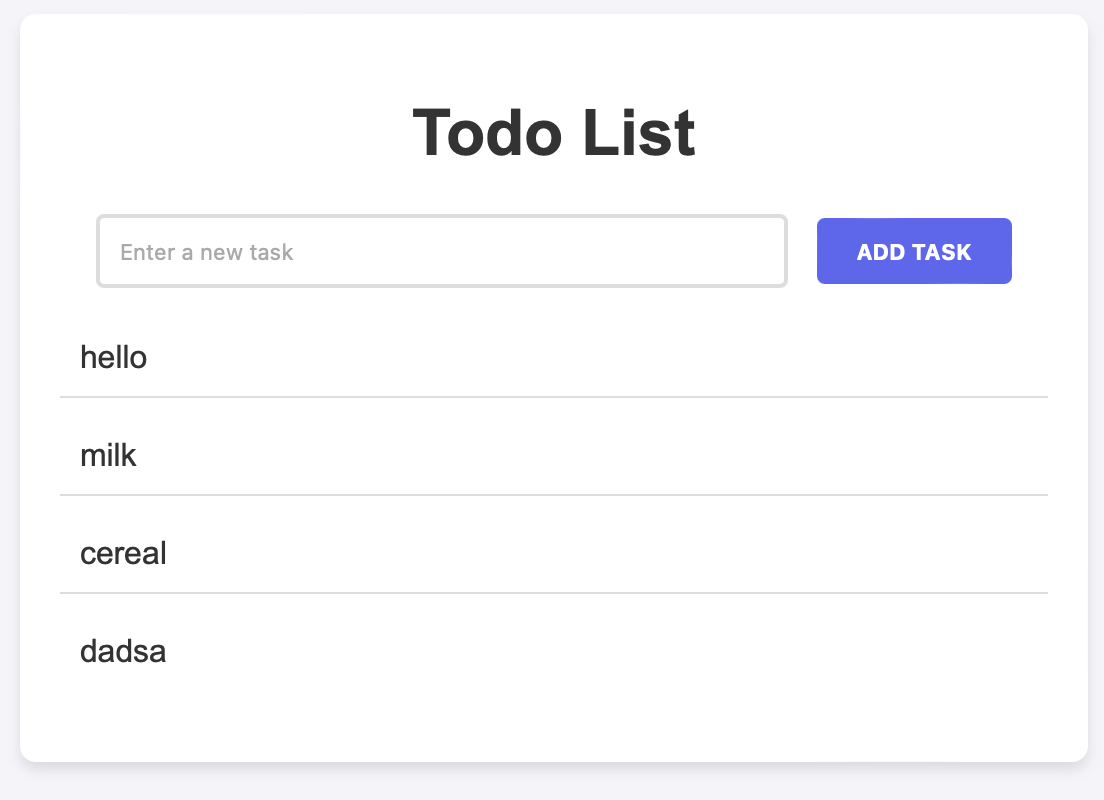

Prompt 3.1 Output

This looks pretty good, especially since I didn't mention any styling details in my prompt. But we've hit a snag: the app lacks a way to edit, delete, or mark tasks as completed!

At this point, we have a couple of paths we can take:

1. For a smaller codebase: It's straightforward. Simply identify what's missing, such as the edit, delete, or mark-as-complete functions. Mention what's missing in the prompt, and paste in all of the existing code that's been generated so far. In our example, this means index.html and app.js.

2. For a larger codebase: The approach needs to be more atomic. Instead of overwhelming the model with massive chunks of code, focus on the specific sections of the code where functionality is missing. Paste those sections of app.js and index.html into the prompt, and update accordingly.

Given that our application isn't overly complex, let's opt for the first approach. This method will allow us to quickly implement the missing features and see our to-do list app become fully functional.

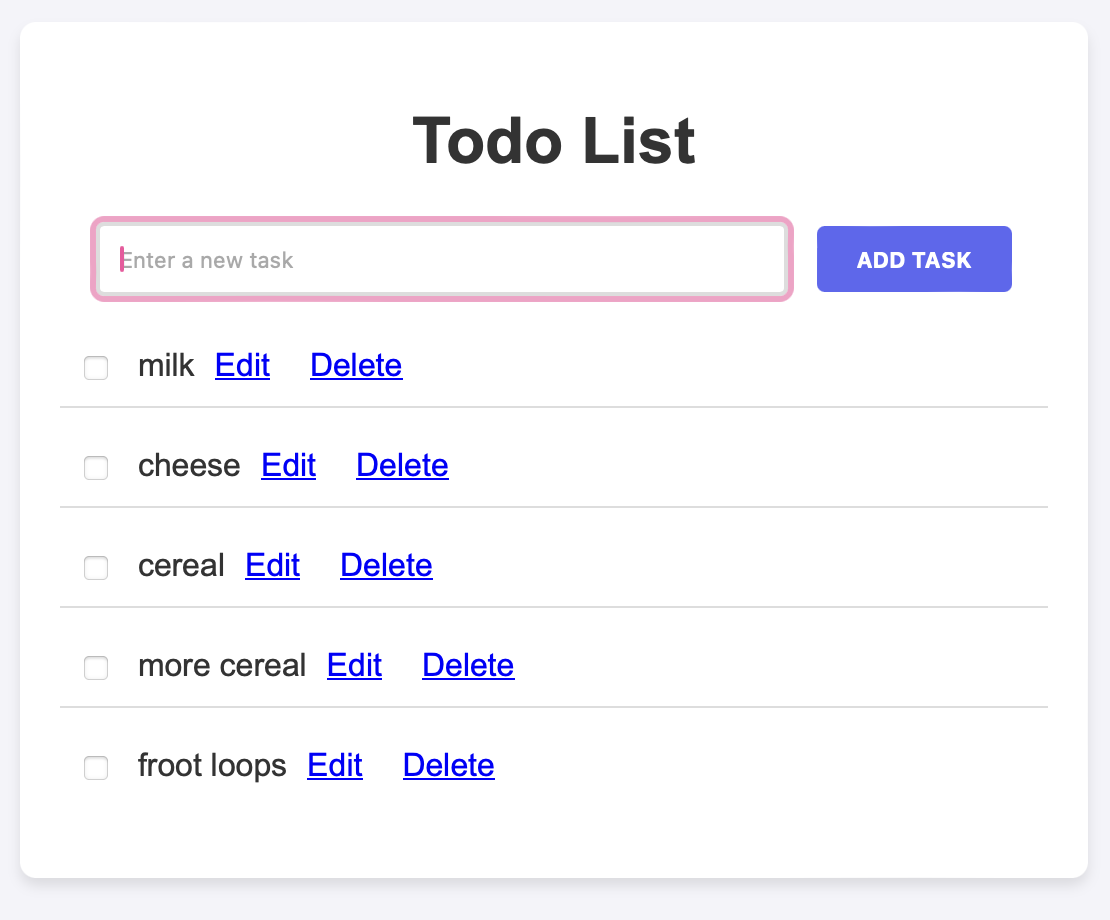

Prompt 3.2

"This todo list app is missing the ability to mark a task as completed. Implement it: [index.html], [app.js]"

"This todo list app is missing the ability to edit tasks. Implement it: [index.html], [app.js]"

"This todo list app is missing the ability to delete tasks. Implement it: [index.html], [app.js]"

What if you're building a much more complex app with thousands of lines of code that is too big for a single response? Back to TIP #1: make your requests atomic. Ask for a single function at a time if you have to, then do the work of gluing those functions together into an app.
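The "missing feature" prompts follow a fixed pattern: one feature per request, followed by the code to paste. The helper below is a hypothetical Python sketch of that template (the name `feature_prompt` and the filename-label format are assumptions). For a small codebase you pass whole files; for a large one, pass only the relevant excerpts.

```python
def feature_prompt(feature: str, files: dict[str, str]) -> str:
    """Build one atomic "missing feature" request.

    `files` maps a filename to the code to paste: the whole file for a small
    codebase, or just the relevant excerpt for a large one.
    """
    parts = [f"This todo list app is missing the ability to {feature}. Implement it:"]
    for name, code in files.items():
        parts.append(f"{name}:\n{code}")
    return "\n\n".join(parts)


# One feature per prompt, sent one at a time rather than bundled with "and".
FEATURES = ["mark a task as completed", "edit tasks", "delete tasks"]
```

With `html` and `js` holding the current file contents, `[feature_prompt(f, {"index.html": html, "app.js": js}) for f in FEATURES]` yields three separate requests, each pasted in its own turn after the previous answer has been applied.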

Prompt 3.2 Output

At this point, we've got a fully functional todo list app that holds state, supports CRUD, and retains data in a sqlite database!
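On the data-layer side, that CRUD support is small. This is a minimal Python/SQLite sketch of the operations the app now supports (create, list, edit, mark complete or incomplete, delete). The table and function names are illustrative assumptions; it is not a transcription of the generated database.js, which is JavaScript.

```python
import sqlite3


def connect(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and create the hypothetical todos table if needed."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS todos ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " title TEXT NOT NULL,"
        " completed INTEGER NOT NULL DEFAULT 0)"
    )
    conn.commit()
    return conn


def add_task(conn: sqlite3.Connection, title: str) -> int:
    """Create: insert a task and return its new id."""
    with conn:  # commit on success
        cur = conn.execute("INSERT INTO todos (title) VALUES (?)", (title,))
    return cur.lastrowid


def list_tasks(conn: sqlite3.Connection) -> list[tuple[int, str, bool]]:
    """Read: all tasks as (id, title, completed), oldest first."""
    rows = conn.execute("SELECT id, title, completed FROM todos ORDER BY id")
    return [(i, t, bool(c)) for i, t, c in rows]


def edit_task(conn: sqlite3.Connection, task_id: int, title: str) -> bool:
    """Update the title; False if no task has that id."""
    with conn:
        cur = conn.execute("UPDATE todos SET title = ? WHERE id = ?", (title, task_id))
    return cur.rowcount == 1


def set_completed(conn: sqlite3.Connection, task_id: int, completed: bool) -> bool:
    """Update the checkbox state (complete or incomplete)."""
    with conn:
        cur = conn.execute(
            "UPDATE todos SET completed = ? WHERE id = ?", (int(completed), task_id)
        )
    return cur.rowcount == 1


def delete_task(conn: sqlite3.Connection, task_id: int) -> bool:
    """Delete a task; False if it was already gone."""
    with conn:
        cur = conn.execute("DELETE FROM todos WHERE id = ?", (task_id,))
    return cur.rowcount == 1
```

Returning a boolean from the update and delete functions lets a request handler answer "not found" when a stale page refers to a task that no longer exists.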

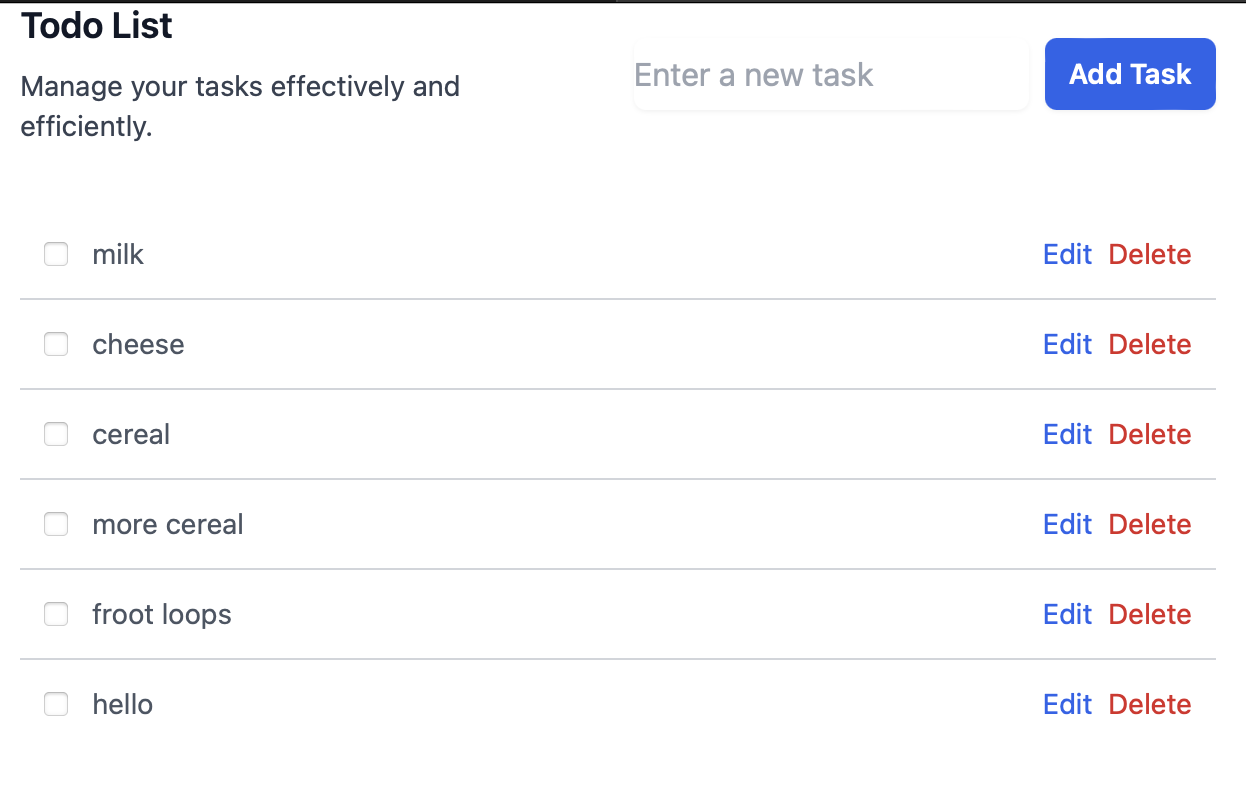

We could call it quits here, but let's do one more thing. I absolutely love TailwindCSS. It takes care of so many annoying frontend quirks. Let's reimplement the entire frontend using Tailwind classes, and do one final prompting trick to have it work well on the first try.

Prompt 4.0

I want to reimplement the front-end of my todo list app using TailwindCSS. Use this example Tailwind list example and adapt it. [paste TailwindCSS list code]

TIP #4:

Give the LLM examples of what you're looking for. LLMs are really good at connecting dots, and can often figure out what you're trying to achieve from a few code snippets you paste in.

Prompt 4.0 Output

We now have a completed, mobile-friendly Tailwind TODO list app!

Tip Summary

1. Start with Atomized Prompts: Break down your requirements into the smallest possible components. This helps ensure clarity and focus, and prevents the LLM from losing track of the request.

2. Avoid Overloading Prompts: If your prompt contains "and", reconsider it. You might be asking for too much in one go. Simplify the prompt to focus on one thing at a time.

3. Use Incremental Building: Start with foundational elements of your application and gradually build up. This approach helps maintain context and improves the quality of output.

4. Specify and Despecify Wisely: Be clear about what you need but avoid unnecessary specifics that might limit the LLM's flexibility. For instance, specifying a role like a "JavaScript engineer" might not be helpful if the task involves multiple technologies.

5. Iterative Refinement: Use the output as a stepping stone. Refine, add, or modify through subsequent prompts based on the initial output received.

6. Utilize Examples: Provide examples to guide the LLM. This can be particularly effective in aligning the output closer to your desired outcome.

7. Manage Complexity with Modularity: For complex systems, build one module or function at a time. This approach helps in managing dependencies and integrating comprehensive systems effectively.

8. Feedback Loop: Implement a feedback loop where you can adjust prompts based on the outputs received. This iterative interaction can significantly enhance the end results.

9. Emphasize Understanding Over Execution: Encourage the LLM to focus on understanding the task rather than just executing it. This might involve setting stages and contexts before diving into coding.

10. Leverage LLM as a Partner: Treat the LLM as a collaborative partner in development. This perspective helps in effectively utilizing its capabilities as an assistant rather than a replacement for human developers.

Additional thoughts

What about Cursor, and other IDE-LLM plugins?

In my experience, the code output simply isn't as good. I suspect these products are front-running the code with a system prompt that is too explicit, which creates unnecessary shackles that the LLM can't escape. I've found that being vaguer in one's prompt instructions (while providing good examples of what you're looking for) nets better results.

Will this change when GPT-5 is released?

Probably not. An LLM's output can only be as good as its prompt. If you prompt GPT-5 with "give me a marketing plan," its output isn't going to be measurably better than GPT-4's, because it has no context. My dream would be for future GPTs to ask questions when they don't have enough context to provide a proper answer. For example: "Before I can give you a marketing plan, I want to learn a little more about what you're trying to achieve. Are you marketing a product or a service?" But a lot of what we're doing today is simulating this behavior. In many ways, multi-prompt GPT-4 is what single-prompt GPT-5 will be.

This article isn't just for code

You can use this advice for creating anything. If you were writing a book, you wouldn't ask it to write the whole book in a single prompt, or even a single chapter. Focus down to the paragraph if you have to. The key insight is thinking atomically.

Code sample

All code generated in this example is provided here: https://github.com/mykeln/todo-example

Written on Jun 12th, 2024